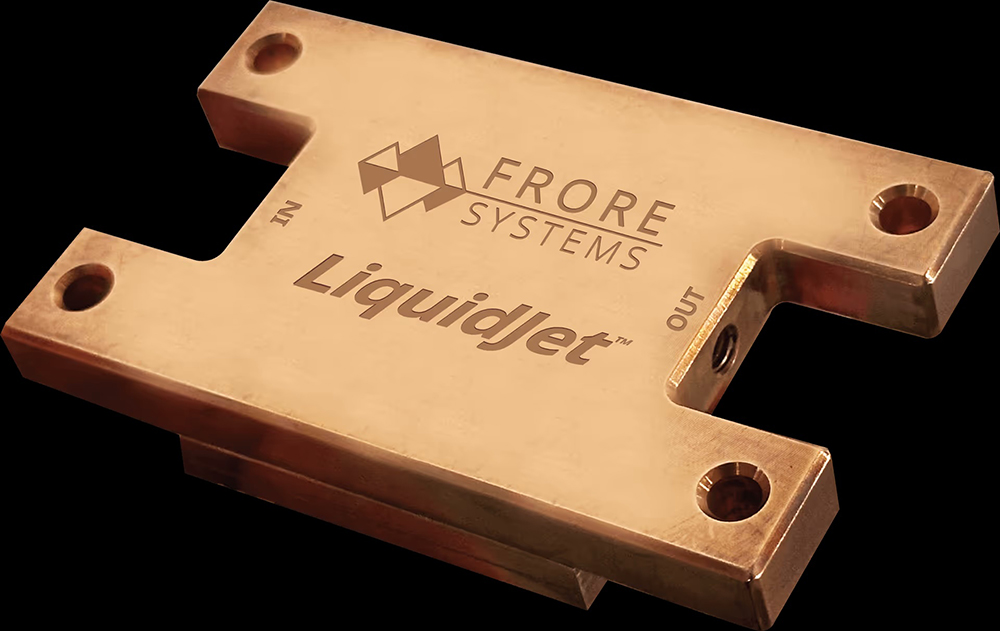

Avicena has demonstrated a functional microLED-based optical link with a transmitter energy consumption of 200 femtojoules per bit (fJ/bit) and a raw bit error rate (BER) below 10⁻¹², according to an announcement timed with ECOC 2025. The company claims the demonstration, built on its scalable LightBundle platform for data center and AI workloads, significantly reduces optical link power compared with traditional laser-based approaches.
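To put the BER figure in perspective, the following sketch converts it into the average time between errors at the demonstration's reported 4 Gb/s data rate, and into the number of error-free bits a test would need to observe to support the claim. The confidence calculation is a common statistical rule of thumb, assumed here for illustration; the announcement does not say how Avicena measured its BER. The function names are hypothetical.

```python
import math

# Reported figures from the announcement.
BER_BOUND = 1e-12    # raw bit error rate stated as below 1E-12
DATA_RATE_BPS = 4e9  # 4 Gb/s demonstration data rate


def mean_seconds_between_errors(ber: float, rate_bps: float) -> float:
    """Average time between bit errors if errors occur at exactly `ber`."""
    return 1.0 / (ber * rate_bps)


def bits_for_zero_error_confidence(ber_target: float, confidence: float) -> float:
    """Bits that must pass with zero errors to claim BER < ber_target.

    Uses the common Poisson approximation n = -ln(1 - CL) / BER,
    which is roughly 3 / BER at 95% confidence.
    """
    return -math.log(1.0 - confidence) / ber_target


if __name__ == "__main__":
    gap_s = mean_seconds_between_errors(BER_BOUND, DATA_RATE_BPS)
    n_bits = bits_for_zero_error_confidence(BER_BOUND, 0.95)
    print(f"mean time between errors at BER 1e-12: {gap_s:.0f} s")
    print(f"error-free bits for 95% confidence: {n_bits:.2e}")
    print(f"test time at 4 Gb/s: {n_bits / DATA_RATE_BPS:.0f} s")
```

At 4 Gb/s, a BER of exactly 10⁻¹² corresponds to one error every 250 seconds on average, and supporting a 95%-confidence claim under this approximation takes about 3 × 10¹² error-free bits, or roughly 12.5 minutes of traffic.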

The live demonstration used a high-sensitivity receiver derived from a high-volume camera sensor process. By combining this optimized camera sensor receiver with the low threshold current of microLEDs, the link operated at a data rate of 4 Gb/s with drive currents near 0.25 mA. The setup paired the microLED with a hybrid-bonded camera sensor and a discrete transimpedance amplifier (TIA).
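As a consistency check on the reported figures, energy per bit multiplied by data rate gives average transmitter power: 200 fJ/bit at 4 Gb/s works out to 0.8 mW. The sketch also divides that power by the ~0.25 mA drive current to obtain an implied voltage of about 3.2 V. That second step assumes all transmitter power flows through the microLED drive path, which the announcement does not state, so treat it as an order-of-magnitude inference rather than a reported specification. Function names are hypothetical.

```python
# Reported figures from the announcement.
ENERGY_PER_BIT_J = 200e-15   # 200 fJ/bit transmitter energy
DATA_RATE_BPS = 4e9          # 4 Gb/s
DRIVE_CURRENT_A = 0.25e-3    # ~0.25 mA drive current


def transmitter_power_w(energy_per_bit_j: float, data_rate_bps: float) -> float:
    """Average power = energy per bit x bits per second."""
    return energy_per_bit_j * data_rate_bps


def implied_voltage_v(power_w: float, current_a: float) -> float:
    """Voltage that would dissipate power_w at current_a (P = V * I).

    Assumption: all transmitter power is drawn through the drive current.
    """
    return power_w / current_a


if __name__ == "__main__":
    p = transmitter_power_w(ENERGY_PER_BIT_J, DATA_RATE_BPS)
    v = implied_voltage_v(p, DRIVE_CURRENT_A)
    print(f"transmitter power: {p * 1e3:.2f} mW")
    print(f"implied drive voltage: {v:.1f} V")
```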

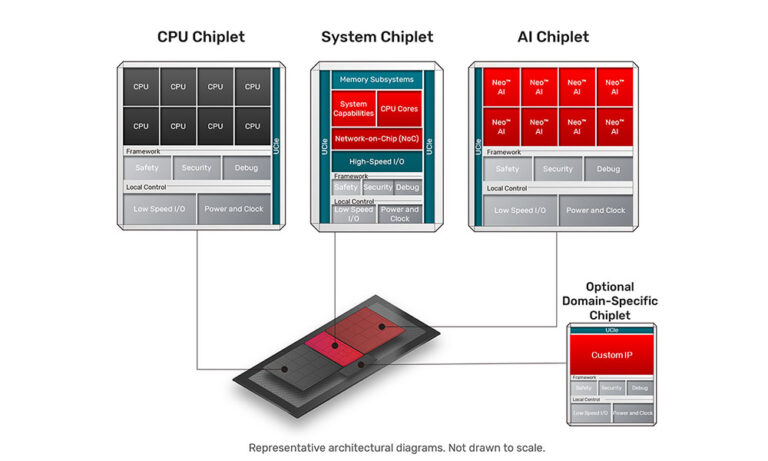

Avicena describes the LightBundle chiplet transceivers as capable of transmitting low-speed, raw parallel data, bypassing the usual serialization into higher-speed lanes. According to the company, this lets dense microLED arrays deliver high aggregate bandwidth with low latency and minimal power consumption. The approach is compatible with integration methods such as co-packaged optics, on-board optics, pluggable modules, and wide memory interfaces. Avicena explicitly targets high-performance computing and AI data center platforms as the primary application for this optical interconnect.
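Because LightBundle sends low-speed parallel data rather than serializing it, aggregate bandwidth scales with the number of microLED lanes. The sketch estimates lane count and transmitter power for a given aggregate bandwidth, assuming every lane runs at the demonstrated 4 Gb/s and that the 200 fJ/bit figure stays constant as lanes are added. The aggregate targets (1 and 10 Tb/s) and function names are hypothetical, not Avicena product specifications, and receiver power is not included.

```python
import math

# Figures from the announcement: per-channel rate and transmitter energy.
LANE_RATE_BPS = 4e9         # 4 Gb/s per microLED channel (demonstrated rate)
ENERGY_PER_BIT_J = 200e-15  # 200 fJ/bit transmitter energy (reported)


def lanes_needed(aggregate_bps: float, lane_bps: float = LANE_RATE_BPS) -> int:
    """Parallel lanes required to reach an aggregate bandwidth (rounded up)."""
    return math.ceil(aggregate_bps / lane_bps)


def tx_power_w(aggregate_bps: float, energy_per_bit_j: float = ENERGY_PER_BIT_J) -> float:
    """Aggregate transmitter power, assuming energy per bit is constant across lanes."""
    return aggregate_bps * energy_per_bit_j


if __name__ == "__main__":
    # Hypothetical aggregate targets, chosen only for illustration.
    for target_bps in (1e12, 10e12):
        print(f"{target_bps / 1e12:.0f} Tb/s -> {lanes_needed(target_bps)} lanes, "
              f"{tx_power_w(target_bps):.2f} W transmitter power")
```

Under these assumptions, 1 Tb/s would take 250 lanes and about 0.2 W of transmitter power. Real designs may differ in per-lane rate, in overheads such as clocking or coding, and in receiver power.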

“By leveraging a highly sensitive receiver, a minor modification to a high-volume camera process, and the unique properties of microLEDs, we can achieve unmatched energy efficiency in our LightBundle interconnects,” said Bardia Pezeshki, CTO of Avicena. “This breakthrough shows how microLED technology can replace legacy laser-based links with a simpler, more scalable, and far lower power solution.”

“The implications of this innovation extend beyond GPU-to-GPU connectivity,” said Marco Chisari, CEO of Avicena. “HBM memory interfaces are one of the next great frontiers for optical interconnects and ideally require wide buses with minimal latency. LightBundle’s ability to directly transmit low-speed parallel data at very low power and low latency, makes it ideally suited to breaking traditional memory bandwidth bottlenecks, and opening the door to new system architectures for next-generation AI and HPC platforms.”

Avicena notes it is working with hyperscale data center operators on scale-out GPU clusters and low-power memory interfaces, aiming to support expansion to systems spanning multiple racks and thousands of GPUs with reduced power requirements.

Source: Avicena