Nvidia has announced the Blackwell Ultra GPU, the latest addition to its Blackwell architecture lineup. The new GPU targets large-scale, real-time artificial intelligence (AI) data center deployments and “AI factories,” combining silicon-level changes with system-level features to raise compute density, memory bandwidth, and energy efficiency over earlier Blackwell parts.

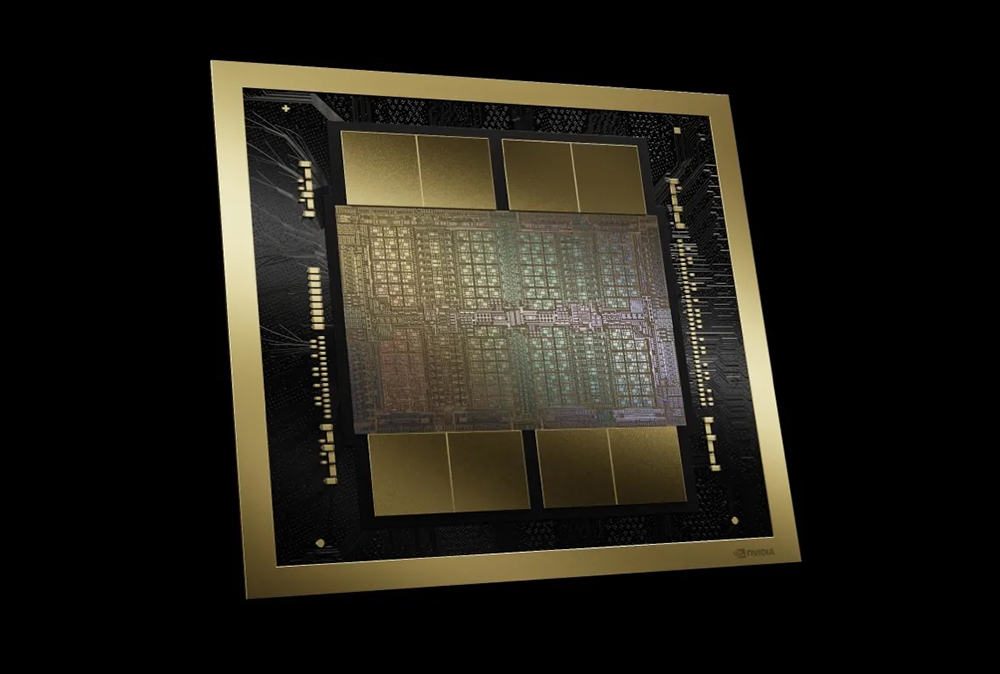

Blackwell Ultra adopts a dual-reticle design: two reticle-sized dies are linked by Nvidia’s custom High-Bandwidth Interface (NV-HBI), which provides 10 TB/s of die-to-die bandwidth. The device is fabricated on TSMC’s 4NP process and contains 208 billion transistors, 2.6 times as many as the previous-generation Nvidia Hopper GPU. Nvidia reports a unified compute domain of 160 streaming multiprocessors (SMs) distributed across eight graphics processing clusters (GPCs). Each SM includes 128 CUDA Cores, four fifth-generation Tensor Cores, 256 KB of Tensor Memory, and dedicated math units for AI workloads.
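The per-SM figures can be multiplied out to chip-level totals. The short Python sketch below does only that arithmetic on the numbers quoted above; the function and constant names are illustrative, and the totals are derived here rather than quoted from Nvidia.

```python
# Figures quoted in the article; all names are illustrative.
SM_COUNT = 160
CUDA_CORES_PER_SM = 128
TENSOR_CORES_PER_SM = 4
TENSOR_MEMORY_KB_PER_SM = 256
TRANSISTORS_BILLION = 208
TRANSISTOR_RATIO_VS_HOPPER = 2.6


def chip_totals():
    """Multiply the per-SM figures out to whole-GPU totals."""
    return {
        "cuda_cores": SM_COUNT * CUDA_CORES_PER_SM,
        "tensor_cores": SM_COUNT * TENSOR_CORES_PER_SM,
        # 1 MB = 1024 KB
        "tensor_memory_mb": SM_COUNT * TENSOR_MEMORY_KB_PER_SM / 1024,
        # Transistor count implied for Hopper by the quoted 2.6x ratio
        "implied_hopper_transistors_billion": (
            TRANSISTORS_BILLION / TRANSISTOR_RATIO_VS_HOPPER
        ),
    }


totals = chip_totals()
for name, value in totals.items():
    print(f"{name}: {value:g}")
```

Under these figures the chip would expose 20,480 CUDA Cores, 640 Tensor Cores, and 40 MB of Tensor Memory, and the 2.6x ratio implies roughly 80 billion transistors for Hopper.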

The GPU’s HBM3E memory subsystem provides 288 GB per device across eight stacks, 3.6 times the capacity of the Hopper H100, with a combined bandwidth of 8 TB/s. Nvidia says the memory system is sized for multi-trillion-parameter models, allowing longer context windows and higher concurrency without offloading key-value caches.
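To give a rough sense of why capacity matters for key-value caches, the sketch below estimates the cache size for one long sequence. The model dimensions are hypothetical and chosen only for illustration; they do not describe any specific model or Nvidia's own sizing method, and the estimate ignores model weights and activations.

```python
# Figures quoted in the article.
HBM_CAPACITY_GB = 288
HBM_STACKS = 8
HBM_BANDWIDTH_TBPS = 8


def kv_cache_gb(layers, kv_heads, head_dim, tokens, bytes_per_value):
    """Approximate key-value cache size in decimal GB for one sequence.

    The factor of 2 accounts for storing one key and one value tensor
    per layer.
    """
    total_bytes = 2 * layers * kv_heads * head_dim * tokens * bytes_per_value
    return total_bytes / 1e9


# Per-stack share of the quoted capacity and bandwidth.
per_stack_gb = HBM_CAPACITY_GB / HBM_STACKS
per_stack_tbps = HBM_BANDWIDTH_TBPS / HBM_STACKS

# Hypothetical model: 80 layers, 8 KV heads of dimension 128,
# a 128,000-token context, 2 bytes per cached value (FP16/BF16).
example_gb = kv_cache_gb(
    layers=80, kv_heads=8, head_dim=128, tokens=128_000, bytes_per_value=2
)
sequences_that_fit = int(HBM_CAPACITY_GB // example_gb)

print(f"per stack: {per_stack_gb:g} GB, {per_stack_tbps:g} TB/s")
print(f"KV cache per sequence: {example_gb:.1f} GB")
print(f"sequences fitting in HBM (cache only): {sequences_that_fit}")
```

With these assumed dimensions a single 128,000-token sequence needs about 42 GB of cache, so only a handful of such sequences would fit in 288 GB even before weights are counted, which is why capacity and lower-precision formats both matter at long context lengths.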

Nvidia highlights dense AI compute: the upgraded fifth-generation Tensor Cores support the new NVFP4 4-bit floating-point format. According to Nvidia, NVFP4 delivers near-FP8 accuracy while cutting memory footprint by up to 3.5 times relative to FP16. The architecture delivers up to 15 petaFLOPS of dense NVFP4 performance, 1.5 times that of the base Blackwell GPU and 7.5 times that of Hopper. Attention layers in transformer models are accelerated by doubling the throughput of the special function units (SFUs) that compute exponentials, reportedly reaching up to 10.7 tera-exponentials per second.
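The "up to 3.5 times" figure is lower than the naive 16/4 = 4 because block-scaled formats carry scale factors alongside the 4-bit values. The sketch below works through that arithmetic under an assumption not stated in this article: one 8-bit scale shared by each block of 16 values. It also backs out the baseline throughput implied by the quoted ratios.

```python
# Assumed NVFP4 layout (not stated in the article): 4-bit values with
# one 8-bit scale factor shared by each block of 16 values.
FP4_BITS = 4
SCALE_BITS = 8
BLOCK_SIZE = 16

# Figure quoted in the article.
NVFP4_DENSE_PFLOPS = 15


def effective_bits_per_value(value_bits=FP4_BITS,
                             scale_bits=SCALE_BITS,
                             block_size=BLOCK_SIZE):
    """Average storage per value, including the amortized block scale."""
    return value_bits + scale_bits / block_size


def footprint_reduction(baseline_bits):
    """How many times smaller the assumed NVFP4 layout is than a baseline."""
    return baseline_bits / effective_bits_per_value()


bits = effective_bits_per_value()
vs_fp16 = footprint_reduction(16)
vs_fp8 = footprint_reduction(8)

# Baselines implied by the quoted 1.5x and 7.5x ratios.
implied_blackwell_pflops = NVFP4_DENSE_PFLOPS / 1.5
implied_hopper_pflops = NVFP4_DENSE_PFLOPS / 7.5

print(f"effective bits per value: {bits}")
print(f"reduction vs FP16: {vs_fp16:.2f}x, vs FP8: {vs_fp8:.2f}x")
print(f"implied baselines: {implied_blackwell_pflops:g} and "
      f"{implied_hopper_pflops:g} petaFLOPS")
```

Under that assumed layout each value costs 4.5 bits on average, giving a reduction of about 3.56 times against FP16, which is consistent with the figure Nvidia quotes. The Hopper baseline implied by the 7.5x ratio would be a higher-precision format, since Hopper predates NVFP4.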

For system integration, Blackwell Ultra supports Nvidia NVLink 5, which provides 1.8 TB/s of bidirectional GPU-to-GPU bandwidth per GPU and non-blocking topologies of up to 576 GPUs, along with PCI Express Gen 6 (256 GB/s bidirectional) and NVLink-C2C for Grace CPU integration. Blackwell Ultra powers several data center systems, including the GB300 NVL72 rack-scale system (1.1 exaFLOPS of dense FP4 compute), rack-integrated Grace Blackwell Ultra Superchips (two GPUs plus one Grace CPU with 1 TB of shared memory), and standardized 8-GPU Nvidia HGX/DGX B300 nodes. Per-GPU power draw reaches up to 1,400 W.
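The rack-level figure can be checked against the per-GPU numbers. The sketch below scales the quoted per-GPU values by GPU count, assuming that "NVL72" denotes 72 GPUs in one NVLink domain; the function name is illustrative, and the power total covers GPUs only, not CPUs, switches, or cooling.

```python
# Per-GPU figures quoted in the article.
GPU_DENSE_NVFP4_PFLOPS = 15
GPU_HBM_GB = 288
GPU_MAX_POWER_W = 1400
NVLINK_TBPS_PER_GPU = 1.8


def aggregate(gpu_count):
    """Scale the per-GPU figures linearly to a system of gpu_count GPUs."""
    return {
        "dense_nvfp4_exaflops": gpu_count * GPU_DENSE_NVFP4_PFLOPS / 1000,
        "hbm_tb": gpu_count * GPU_HBM_GB / 1000,
        "nvlink_tbps": gpu_count * NVLINK_TBPS_PER_GPU,
        "gpu_power_kw": gpu_count * GPU_MAX_POWER_W / 1000,
    }


nvl72 = aggregate(72)     # assumed: 72 GPUs per GB300 NVL72 rack
hgx = aggregate(8)        # 8-GPU HGX/DGX B300 node
superchip = aggregate(2)  # two GPUs per Grace Blackwell Ultra Superchip

for label, system in (("NVL72", nvl72), ("HGX", hgx), ("Superchip", superchip)):
    summary = ", ".join(f"{key}={value:.3g}" for key, value in system.items())
    print(f"{label}: {summary}")
```

Seventy-two GPUs at 15 petaFLOPS each give 1.08 exaFLOPS, which rounds to the quoted 1.1 exaFLOPS. Two GPUs contribute 576 GB of HBM, so the 1 TB of shared memory quoted for the superchip presumably also counts memory attached to the Grace CPU.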

Operational features target multi-tenant cloud deployments. They include advanced scheduling, work partitioning through Multi-Instance GPU (MIG), a hardware root of trust, a Trusted Execution Environment with inline NVLink protection, and Nvidia’s AI-driven reliability, availability, and serviceability (RAS) engine. The GPU also integrates fixed-function video and JPEG decoders and an 800 GB/s hardware decompression engine, so AI preprocessing pipelines can run on the GPU rather than the host CPU. Blackwell Ultra remains compatible with the established CUDA programming model and supports current AI frameworks, distributed scheduling, and Nvidia’s developer libraries.

Source: Nvidia